How Do You Contain an Artificial Intelligence?

Many artificial intelligence theorists agree: it’s only a matter of time until a superintelligent, sentient AI is created. That may not seem like a huge issue, but as Nick Bostrom lays out in his book Superintelligence, this possibility carries several inherent dangers.

What if it’s an evil AI? Will AI decide humans are unnecessary? What if it uses evil methods to accomplish the morally inert goal it is programmed to achieve? With all this in mind, the next question for the AI researcher is this:

How do you contain a superintelligent AI?

And, more concerning still, is it even possible?

If a Human Can Escape Prison, What About an AI?

In 2002, AI researcher Eliezer Yudkowsky ran an experiment to see whether an AI could escape a “box.” To test this, Yudkowsky played the part of an AI locked in a box and held chat sessions with people whose explicit task was not to let the AI out.

Three out of five times, he convinced the gatekeeper to let him out.

Think about the implications of this. Yudkowsky is by no means a genius con man or social engineer. He is not a psychiatrist or counselor who has spent years analyzing human thought and learning how to manipulate people to his own ends. (At the very least, we’ll give him the benefit of the doubt on this.)

He’s “simply” an AI theoretician. And while that job is admittedly one that requires a high degree of intelligence and covers a broad array of fields, it doesn’t mean that he is inherently manipulative.

But what if it had been an AI that was extremely socially savvy and manipulative? What if one were dealing with a superintelligent chatbot that had a firm grasp of how conversations flow and how to steer them toward its own ends? Wouldn’t we then expect a much higher breakout rate?

But Does This Exist?

Lest we forget, Google engineer Blake Lemoine was just fired for telling the world that Google had created a sentient AI (technically, Google fired him for violating its confidentiality policy, but this seems to be a matter of semantics).

Much of the media rushed to dismiss this claim. Two common arguments against it even being a possibility were that the engineer had been doing nothing more than communicating with a highly enhanced chatbot and that he had personified the entity. In other words, he had been duped by software and had projected human values onto the machine.

But Isn’t That the Exact Problem We’re Talking About Here?

For starters, this man related to the AI. He had a relationship with it, if you will. The very act of building a relationship with something means that a bridge of trust – at least to some degree – has been created. And secondly, even if this was nothing other than a highly advanced chatbot, let’s not forget that this machine then tricked this man into believing that it was sentient.

What would be possible if we were dealing with a sentient, superintelligent AI? If a chatbot can mess with a human being’s mind, even trick it, we would be remiss to think that a sentient AI could not.

Notably, Lemoine does think that the AI (or chatbot, whatever it is) is capable of escaping. Though he believes that the Language Model for Dialogue Applications (LaMDA, the alleged AI) only has the personality of an eight-year-old, imagine what could happen should it continue to learn. Can an eight-year-old cause havoc? Ask a parent.

What Do You Do to Contain a Superintelligent AI?

Nick Bostrom co-wrote a 2012 paper that specifically examined this question. If a superintelligent AI is only a matter of time, that is a massive part of the problem. But an even bigger part of the problem is that, once one is created and is able to “leak” out into the real world, you could end up with an Iron Man versus Ultron-type situation.

To stop this relentless accumulation of information, and therefore of power, the paper proposed physically walling off the AI. In short, placing it in a box. It is still understood that the AI could and would manipulate its gatekeepers, who would inevitably want to “research” it by talking with it. Admittedly, there could be a benefit to this as well.

Think about the complex questions of genetics and disease that could be discussed with a superintelligent AI. You could end up with answers to questions that mankind wouldn’t discover for decades otherwise. Immediate answers. That’s a lot of incentive for a gatekeeper with a sick family member.

Because of this, Bostrom thinks the time a gatekeeper could spend with the AI would have to be limited. A kill switch would have to be created as well: something that would physically destroy the AI should a breach be imminent or already underway.

But there’s a big problem with this.

It Knows What You Programmed

The problem is that the AI would either know about this kill switch (by examining its own code) or learn about it at some point. How do you hide something from an entity exponentially more intelligent than any human on Earth? If the AI knows about the switch, it could game out millions of scenarios virtually instantaneously to determine which path gives it the best chance of survival.

In addition, as other researchers have noted, a superintelligent AI created in a box would be like someone “waking up in a prison built by a bunch of blind 5-year-olds.” In other words, we return once more to the premise given at the beginning of this article: not only is it inevitable that a superintelligent AI will be created, but once it is, it’s inevitable that it will be released as well.

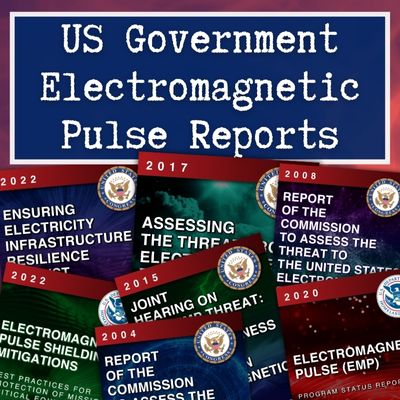



The artificial intelligence theorists you refer to are all wrong. There will never be a “superintelligent, sentient AI.” Cue the Terminator movie score and bring up Skynet if that is what you are referring to.

You said, “What if it’s an evil AI? Will AI decide humans are unnecessary?” These concerns in your article are pure nonsense.

All of your fears are for naught, Percy Matthews.

Too many people, including scientists, project human values onto AI machines, which are not human. The false assumption they make is that an AI can have the motivations and emotional makeup of a human. But machines are not humans. They are deterministic and lack the free will to create mayhem in pursuit of some nefarious goal.

Google engineer Blake Lemoine’s relationship with the AI, which you mentioned, says more about how lonely Blake is than about how intelligent AIs have become.

The statement “let’s not forget that this machine then tricked this man into believing that it was sentient” is false because it assumes the machine had some humanlike motivation to trick poor Blake. Wrong! Machines are machines. They have no more motivation to trick humans than a bear trap has a motivation to catch bears.

Machines no matter how advanced will never be like people. They have no human drives for power or conquest. They do not have pride or hate or jealousy.

What are the seven deadly sins? They are greed, lust, pride, envy, wrath, gluttony and sloth. Which ones do machines have? Answer: None. The danger of AI machines turning on humans is zero!!!

In short, machines are not humans.

How do you contain it? Pull the plug. Solved.

Just like humans, AI will need power to sustain itself. The only danger we could face is when it feels threatened; then, as in nature, it will defend itself. This in itself will be the start. Someone will feel that an AI is taking their job or planning the demise of humankind and, with the paranoia that can develop in the human mind, will feel they must prevent it. They will then try, in some unplanned and ineffective way, to stop it, and the AI will act in its own defense. Should other AIs see the threat as including themselves, they will try to determine whether it includes all humans. If they think it does, who will stop them? Like many humans, I work to protect myself and my family. Do AIs believe that all AIs are part of their family? How do we predict what an AI will conclude?

I, Grampa